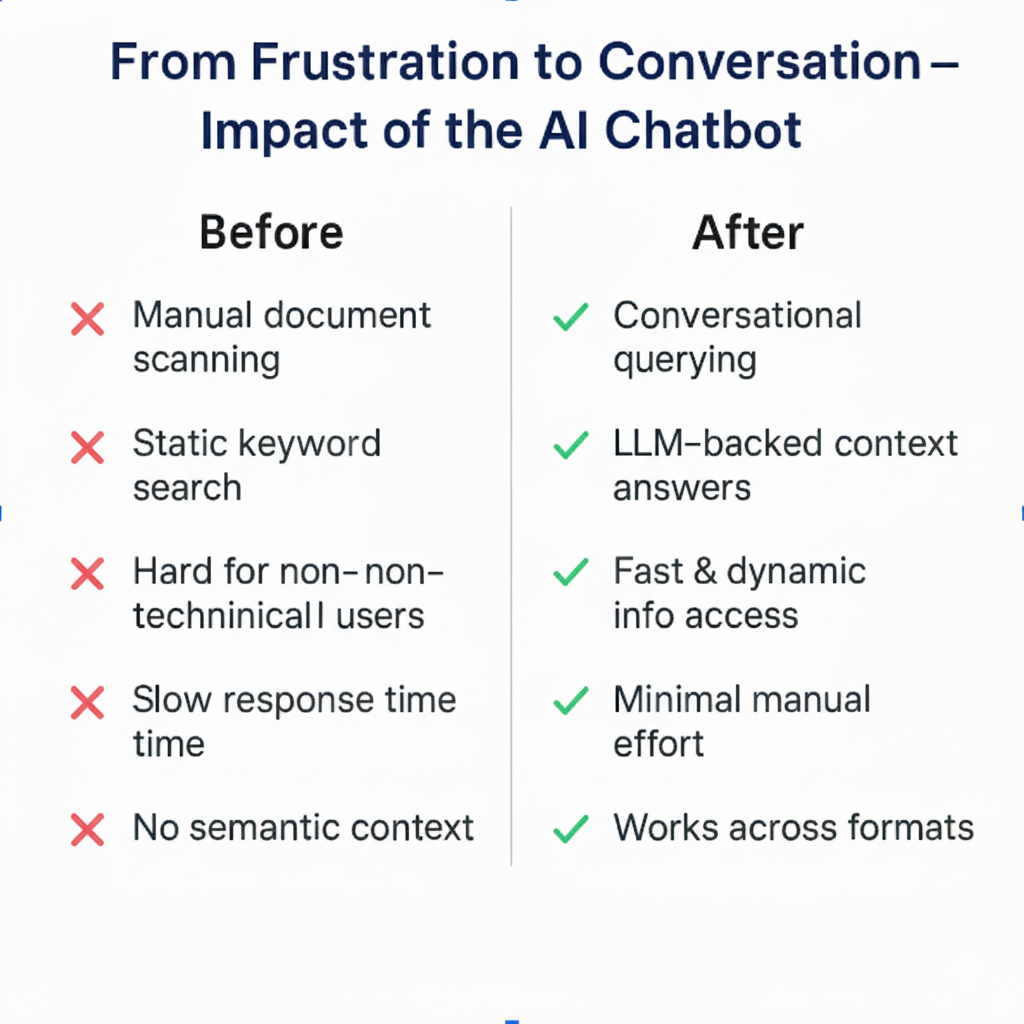

A mid-sized SaaS company specializing in HR and payroll management faced a growing barrier: its support and operations teams struggled to quickly extract key information from a maze of internal documents, including policy PDFs, compliance guides, and technical manuals. Manual searching and frequent escalations to subject matter experts slowed resolutions and increased workloads.

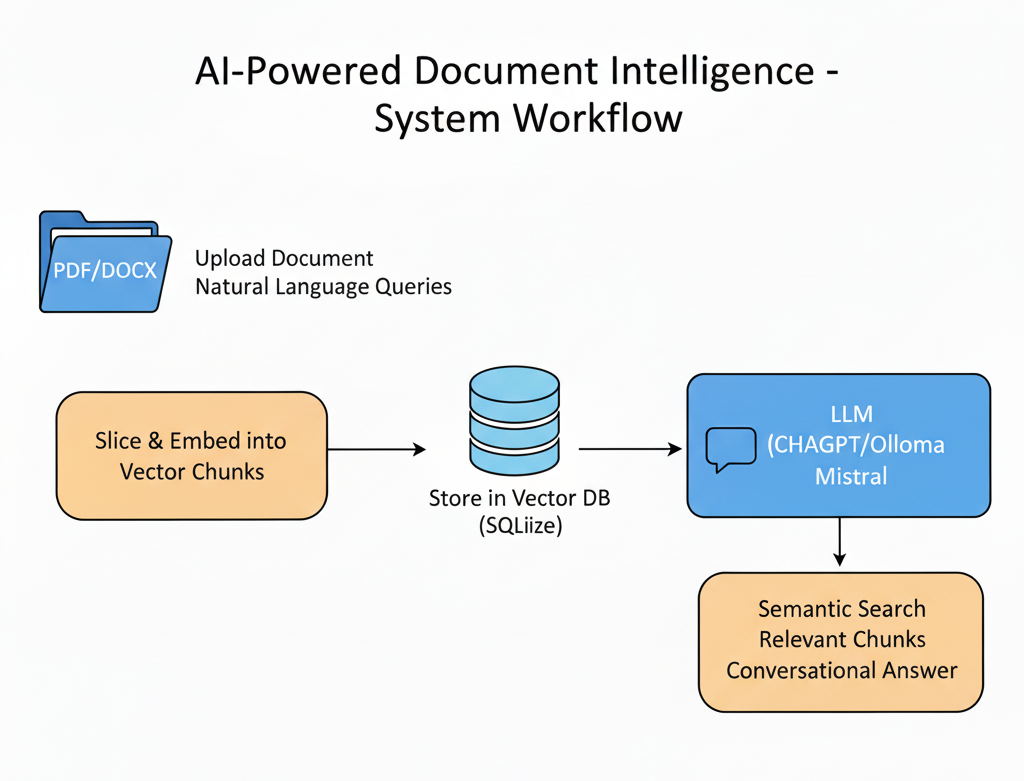

TechTez was brought in to develop an AI-powered chatbot that takes natural-language questions and returns context-aware answers drawn from unstructured documents. The solution now powers internal support workflows across multiple departments.

Enterprise teams are often buried under static, unstructured documents in a variety of formats (PDF, DOCX, etc.). Retrieving accurate, contextual answers is slow, labor-intensive, and hard to scale, especially when answers are scattered across numerous files.

Key obstacles included: